Mobile LLM Caching: When to Refresh, When to Reuse

How I built a TTL-based cache to cut API costs without sacrificing UX in a real-time coaching app

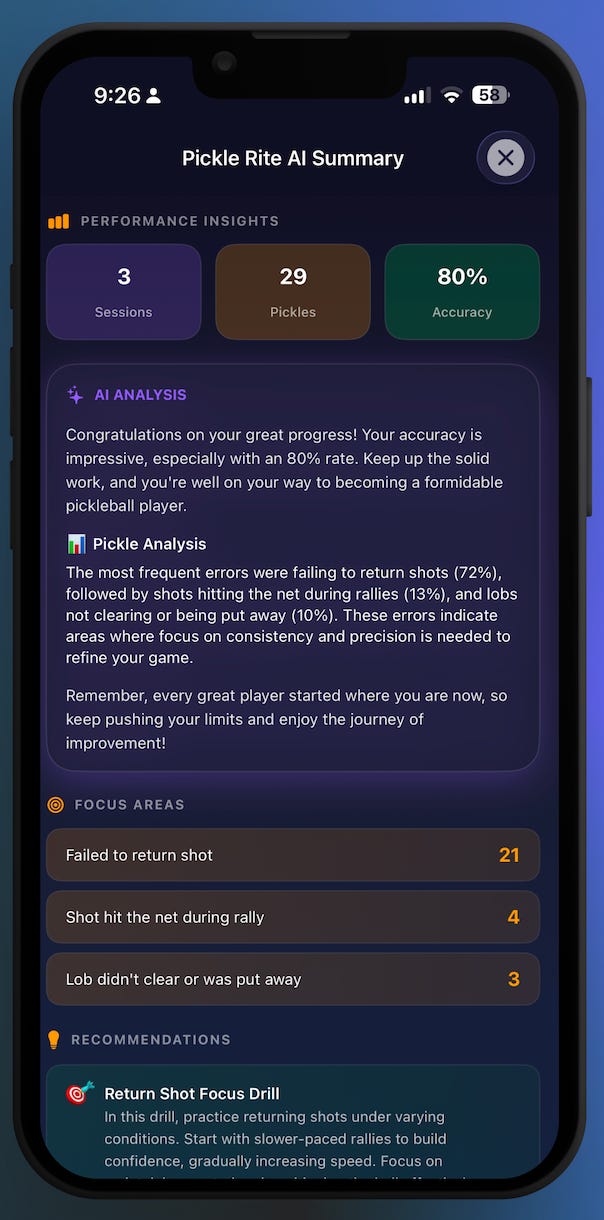

I'm building a pocket coach for pickleball players. The iOS and Apple Watch app tracks your sessions — every serve, dink, volley, and fault — then uses AI to analyze your patterns and tell you exactly what to practice next.

The AI features are powered by Apple’s on-device FoundationModels framework, but the caching patterns I’m about to share work the same whether you’re calling OpenAI, Anthropic, Gemini, or any other LLM. The core problem is universal: AI responses are expensive to generate, and most of the time the underlying data hasn’t changed.

Here’s how I solved it.

The Problem: Two AI Features, Zero Caching

PickleRite has two distinct AI-powered features that needed caching:

1. AI Performance Summary: when a player opens the RiteAI tab, the app generates a full analysis covering the overall insight, error breakdown, personalized drill recommendations, and a motivational closing. This is a structured response defined with the `@Generable` macro from Apple's FoundationModels framework:

```swift
import FoundationModels

// Structured output: the model fills in every property described by @Guide.
// `Recommendation` must itself be a @Generable type (defined elsewhere in the app).
@Generable
struct AISummary {
    @Guide(description: "Overall performance insight and analysis text")
    let overallInsight: String

    @Guide(description: "Error breakdown analysis with specific insights for each error type")
    let errorAnalysis: String

    @Guide(description: "Array of 2-3 personalized drill recommendations with titles and descriptions")
    let recommendations: [Recommendation]

    @Guide(description: "Motivational closing message")
    let motivationalClosing: String
}
```

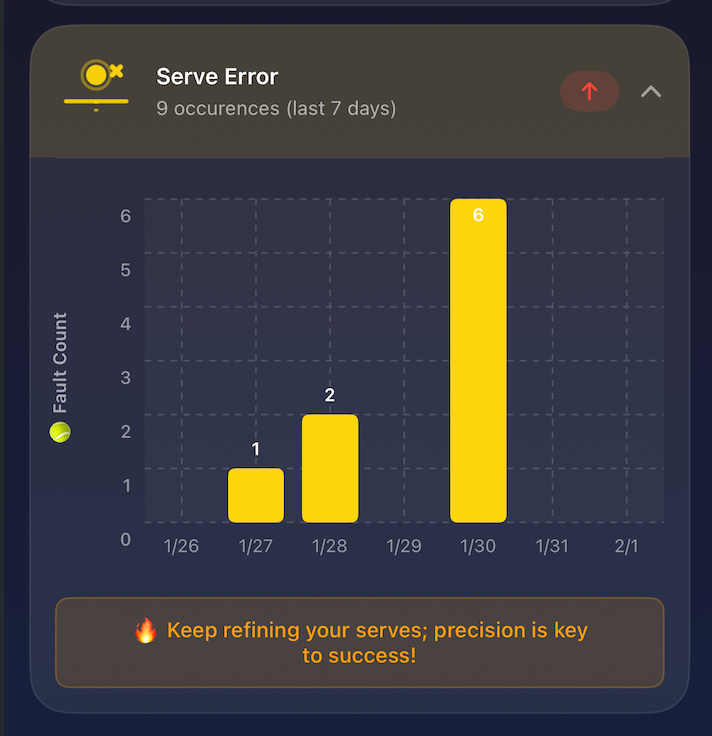

2. Focus Messages: in the Analytics tab, each error type card (serve errors, dink mistakes, net errors, and so on) shows a short AI-generated coaching message. If you're improving at dinks, it congratulates you; if your serve errors spiked, it tells you what to focus on. The messages are highlighted with an orange rectangle in the screenshots.

Without caching, opening the Analytics tab generated **10 separate AI calls**, one per error type. Every single time. Add the summary call and that's 11 AI generations for data that changes only when a player logs a new session.

The Architecture: Two Stores, Two Cache Strategies

My first instinct was to add cache models to the existing SwiftData store. Bad idea. PickleRite already had beta users with session data in default.store. Adding new @Model classes to that store would require a SwiftData migration — and for what? Disposable cache data that I’d happily nuke.

So I split the data into two stores:

```swift
import Foundation
import SwiftData

var sharedModelContainer: ModelContainer = {
    let appGroupIdentifier = "group.com.xxx.PickleRite"

    guard let containerURL = FileManager.default.containerURL(
        forSecurityApplicationGroupIdentifier: appGroupIdentifier
    ) else {
        fatalError("Failed to get App Group container URL")
    }

    // Keep existing default.store for Session data (preserves beta tester data!)
    let defaultStoreURL = containerURL.appendingPathComponent("default.store")

    // Separate cache.store for new CachedGeneratedMessage (no migration needed)
    let cacheStoreURL = containerURL.appendingPathComponent("cache.store")

    // Main store: Session + CachedAISummary (KEEPS existing default.store)
    let sessionConfig = ModelConfiguration(
        "DefaultStore",
        schema: Schema([Session.self, CachedAISummary.self]),
        url: defaultStoreURL
    )

    // Cache store: CachedGeneratedMessage (NEW separate store, no migration)
    let cacheConfig = ModelConfiguration(
        "CacheStore",
        schema: Schema([CachedGeneratedMessage.self]),
        url: cacheStoreURL
    )

    do {
        // One container spanning both stores; each model type is persisted
        // in the configuration whose schema lists it.
        return try ModelContainer(
            for: Session.self, CachedAISummary.self, CachedGeneratedMessage.self,
            configurations: sessionConfig, cacheConfig
        )
    } catch {
        fatalError("Could not create ModelContainer: \(error)")
    }
}()
```

`default.store` holds `Session` data and `CachedAISummary` (the full performance analysis cache). This store already existed, and adding `CachedAISummary` alongside `Session` was a safe migration.

`cache.store` holds CachedGeneratedMessage (the per-error-type coaching messages). Brand new store, no migration complexity. If something goes wrong, I can delete it entirely without touching a single user session.

The lesson: Treat AI cache as disposable infrastructure. Don’t let it entangle with your core data.
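Because `cache.store` only ever holds regenerable output, resetting it can be a plain file deletion. A minimal sketch, assuming the store sits at a known URL and that SQLite keeps its usual `-wal` and `-shm` sidecar files beside it; `resetCacheStore(at:)` is a hypothetical helper, and it should run before any `ModelContainer` opens that store.

```swift
import Foundation

/// Hypothetical helper: deletes a disposable SwiftData/SQLite store plus its
/// journal sidecar files. Run it before any ModelContainer opens the store.
/// Returns the number of files actually removed.
@discardableResult
func resetCacheStore(at storeURL: URL, fileManager: FileManager = .default) -> Int {
    // Assumption: SQLite in WAL mode keeps "<name>-wal" and "<name>-shm" next to the main file.
    let candidates = ["", "-wal", "-shm"].map { suffix in
        storeURL.deletingLastPathComponent()
            .appendingPathComponent(storeURL.lastPathComponent + suffix)
    }

    var removed = 0
    for url in candidates where fileManager.fileExists(atPath: url.path) {
        do {
            try fileManager.removeItem(at: url)
            removed += 1
        } catch {
            // A cache reset should never block launch; log and move on.
            print("Could not remove \(url.lastPathComponent): \(error)")
        }
    }
    return removed
}
```

Since only the three `cache.store` files are targeted, `default.store` and every session in it are left alone.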

Smart Cache Keys: Why TTL Alone Doesn’t Work

Here’s where caching AI responses gets interesting. Traditional API caching uses time-based expiry: cache for 5 minutes, then refresh. But AI responses aren’t like weather data or stock prices — they should be valid **as long as the underlying data hasn’t changed**.

A player might not log a session for three days. That cached analysis is still perfectly valid. But if they log a new session at lunch, the cache from this morning is instantly stale — even though it’s only 4 hours old.
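To make the contrast concrete, here is a small sketch that evaluates both scenarios with a pure time rule and with a data-change rule. `CacheEntry`, the string session IDs, and the 24-hour TTL (standing in for any TTL long enough to actually save work) are illustrative assumptions, not PickleRite's real model.

```swift
import Foundation

/// Illustrative cache entry: when it was built and from which sessions.
struct CacheEntry {
    let createdAt: Date
    let inputSessionIds: Set<String>
}

/// Time-based rule: valid while younger than the TTL, whatever the data says.
func isValidByTTL(_ entry: CacheEntry, now: Date, ttl: TimeInterval) -> Bool {
    now.timeIntervalSince(entry.createdAt) < ttl
}

/// Data-change rule: valid while the inputs are unchanged, whatever its age.
func isValidByInputs(_ entry: CacheEntry, currentSessionIds: Set<String>) -> Bool {
    entry.inputSessionIds == currentSessionIds
}

let now = Date()
let hour: TimeInterval = 3600
let ttl: TimeInterval = 24 * hour  // assumed TTL for illustration

// Scenario A: built three days ago, no sessions logged since.
let threeDayOld = CacheEntry(createdAt: now.addingTimeInterval(-72 * hour),
                             inputSessionIds: ["s1", "s2"])
let unchanged: Set<String> = ["s1", "s2"]

// Scenario B: built four hours ago, then a session was logged at lunch.
let fourHourOld = CacheEntry(createdAt: now.addingTimeInterval(-4 * hour),
                             inputSessionIds: ["s1", "s2"])
let afterLunch: Set<String> = ["s1", "s2", "s3"]

print("A:", isValidByTTL(threeDayOld, now: now, ttl: ttl),
      isValidByInputs(threeDayOld, currentSessionIds: unchanged))  // A: false true
print("B:", isValidByTTL(fourHourOld, now: now, ttl: ttl),
      isValidByInputs(fourHourOld, currentSessionIds: afterLunch)) // B: true false
```

The time rule is wrong in both directions: it throws away a three-day-old result that is still accurate and keeps serving a four-hour-old one that is already stale.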

Strategy 1: Session Fingerprinting (for AI Summaries)

The AI Summary cache stores the IDs of every session that was included in the analysis. On cache lookup, I compare those IDs against the current sessions for that date:

```swift
func getCachedSummary(
    for analysisDate: Date,
    userId: UUID?,
    currentSessionIds: [UUID]
) -> CachedAISummary? {
    let calendar = Calendar.current
    let startOfDay = calendar.startOfDay(for: analysisDate)
    let endOfDay = calendar.date(byAdding: .day, value: 1, to: startOfDay)!

    // Most recent cached summary for this calendar day.
    var descriptor = FetchDescriptor<CachedAISummary>(
        predicate: #Predicate { cache in
            cache.analysisDate >= startOfDay &&
            cache.analysisDate < endOfDay
        },
        sortBy: [SortDescriptor(\.createdAt, order: .reverse)]
    )
    descriptor.fetchLimit = 1

    // `context` is the manager's ModelContext. This function doesn't throw,
    // so a failed fetch is treated as a cache miss.
    guard let mostRecent = (try? context.fetch(descriptor))?.first else { return nil }

    // The key check: do the sessions match?
    let sortedCurrent = currentSessionIds.sorted()
    let sortedCached = mostRecent.sessionIds.sorted()

    if sortedCurrent != sortedCached {
        return nil // Sessions changed: cache is invalid
    }

    return mostRecent
}
```

Added a session? Cache miss. Deleted one? Cache miss. Same sessions as before? Cache hit: instant UI, no AI call needed.
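The sorted-array comparison can also be collapsed into a single fingerprint string. A minimal sketch, assuming session IDs are `UUID`s as in the code above; `sessionFingerprint(_:)` is a hypothetical helper, not part of the app.

```swift
import Foundation

/// Hypothetical helper: one order-independent string for a set of session IDs.
/// Deduplicating and sorting means [a, b] and [b, a] produce the same value.
func sessionFingerprint(_ ids: [UUID]) -> String {
    Set(ids.map(\.uuidString)).sorted().joined(separator: ",")
}

let a = UUID(), b = UUID(), c = UUID()
let cachedFingerprint = sessionFingerprint([a, b])

print(sessionFingerprint([b, a]) == cachedFingerprint)     // true: same sessions, new order
print(sessionFingerprint([a, b, c]) == cachedFingerprint)  // false: a session was added
print(sessionFingerprint([a]) == cachedFingerprint)        // false: a session was deleted
```

Stored as a plain `String` property, a fingerprint like this could be matched directly inside a `#Predicate` instead of fetching the entry and comparing arrays in memory.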

Strategy 2: Data Hash (for Focus Messages)

Focus messages are more granular — one per error type. Instead of tracking session IDs, I hash the actual error data:

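```swift
import CryptoKit
import Foundation

private func createSessionDataHash(sessionData: [SessionErrorData]) -> String {
    let dataString = sessionData
        .map { "\($0.date.timeIntervalSince1970)-\($0.errorCount)-\($0.sessionCount)" }
        .joined(separator: "|")

    // Don't use dataString.hashValue: Swift seeds String hashing per process,
    // so the value changes on every launch and a persisted key would never match.
    // SHA-256 produces the same key across launches.
    let digest = SHA256.hash(data: Data(dataString.utf8))
    return digest.map { String(format: "%02x", $0) }.joined()
}
```

Same sessions but different error counts? Different hash, so a cache miss. This catches edge cases that session ID comparison would miss, like a session that is edited rather than added. The key also has to be stable across app launches, which rules out Swift's `hashValue`: its seed is randomized per process, so a hash persisted today would never match after a relaunch.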

The principle: Your cache key should reflect what the AI actually sees, not when you last called it.

Cache Invalidation: The Part Everyone Dreads

Cache invalidation is famously one of the two hard problems in computer science. Here’s my approach: **invalidate on data change, not on a timer.**

When a player finishes a session (on iPhone or Apple Watch), the app posts a notification. When they delete a session, same thing. These notifications trigger cache invalidation:

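```swift
// .didSaveSession and .didDeleteSession are app-defined Notification.Name values.
// addObserver(forName:object:queue:using:) returns a token; keep it if the
// observer ever needs to be removed.
func setupAICacheInvalidation(context: ModelContext) {
    // A session was saved (on iPhone or synced from Apple Watch)...
    NotificationCenter.default.addObserver(
        forName: .didSaveSession, object: nil, queue: .main
    ) { [weak self] _ in
        self?.invalidateAICache(context: context)
    }

    // ...or deleted.
    NotificationCenter.default.addObserver(
        forName: .didDeleteSession, object: nil, queue: .main
    ) { [weak self] _ in
        self?.invalidateAICache(context: context)
    }
}
```

The invalidation itself is targeted. It only wipes caches for the affected date, not everything: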

```swift
private func invalidateAICache(context: ModelContext) {
    // Invalidate the AI summary for the most recent performance date
    let summaryCacheManager = AISummaryCacheManager(context: context)
    summaryCacheManager.invalidateCache(
        for: recentPerformanceDate,
        userId: userProfile.supabaseUserId
    )

    // Invalidate the focus-message cache of every error type for that date
    let messageCacheManager = GenerateMessageCacheManager(context: context)
    let errorTypes: [ErrorType] = [
        .serveError, .lobMiss, .missedReturn, .kitchenFault, .netError,
        .dinkError, .volleyError, .smashError, .communicationError,
        .slowNetTransition
    ]

    for errorType in errorTypes {
        messageCacheManager.invalidateCache(
            for: recentPerformanceDate,
            errorType: errorType,
            userId: userProfile.supabaseUserId
        )
    }
}
```

On top of this, I have two safety nets:

- Stale threshold (10 minutes): Even if session IDs match, a cache older than 10 minutes can be refreshed in the background while showing the cached version immediately.

- Retention cleanup (30 days): Old caches get cleaned up automatically before each save to prevent unbounded storage growth.
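Both safety nets can be folded into one freshness check. A sketch, assuming the entry's inputs have already matched (otherwise it's a plain miss); `CacheFreshness`, `CachePolicy`, `freshness(createdAt:now:)` and `pruneExpired` are hypothetical names, and the 10-minute and 30-day values come from the list above.

```swift
import Foundation

/// What to do with a cached entry whose inputs still match.
enum CacheFreshness: Equatable {
    case fresh            // show it; no AI call
    case staleRevalidate  // show it now; regenerate in the background
    case expired          // past retention; delete during cleanup
}

enum CachePolicy {
    static let staleThreshold: TimeInterval = 10 * 60         // 10 minutes
    static let retentionPeriod: TimeInterval = 30 * 24 * 3600 // 30 days
}

func freshness(createdAt: Date, now: Date = Date()) -> CacheFreshness {
    let age = now.timeIntervalSince(createdAt)
    if age >= CachePolicy.retentionPeriod { return .expired }
    if age >= CachePolicy.staleThreshold { return .staleRevalidate }
    return .fresh
}

/// Retention cleanup before a save: drop anything past the retention period.
func pruneExpired<Entry>(_ entries: [Entry],
                         createdAt: (Entry) -> Date,
                         now: Date = Date()) -> [Entry] {
    entries.filter { freshness(createdAt: createdAt($0), now: now) != .expired }
}
```

The `.staleRevalidate` case is the stale-while-revalidate pattern: the user never waits, and the background refresh only costs a generation when the cached entry is old enough to be worth rechecking.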

What I’d Do Differently

A few reflections after living with this system for a couple of months:

SwiftData might be overkill for cache-only data - SwiftData’s migration system is designed for long-lived user data, not disposable caches. A simple file-based cache (JSON files keyed by a hash) would be simpler and avoid the schema management overhead entirely.
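For comparison, a file-based cache needs only `Codable` and `FileManager`. A minimal sketch, not what PickleRite ships: `FileCache` and its one-JSON-file-per-key layout are assumptions, keys are expected to be filesystem-safe (for example, a hex hash), and a decoding failure is treated as a miss so a change to the cached type simply triggers regeneration.

```swift
import Foundation

/// Minimal file-based cache: one JSON file per key inside a directory.
struct FileCache<Value: Codable> {
    let directory: URL

    init(directory: URL) throws {
        self.directory = directory
        try FileManager.default.createDirectory(at: directory,
                                                withIntermediateDirectories: true)
    }

    private func fileURL(for key: String) -> URL {
        directory.appendingPathComponent(key).appendingPathExtension("json")
    }

    /// nil on a miss, or if the file can't be decoded (e.g. after a type change).
    func value(forKey key: String) -> Value? {
        guard let data = try? Data(contentsOf: fileURL(for: key)) else { return nil }
        return try? JSONDecoder().decode(Value.self, from: data)
    }

    func store(_ value: Value, forKey key: String) throws {
        let data = try JSONEncoder().encode(value)
        try data.write(to: fileURL(for: key), options: .atomic)
    }

    /// Wiping the cache is deleting the directory; recreate it so the cache stays usable.
    func removeAll() throws {
        try FileManager.default.removeItem(at: directory)
        try FileManager.default.createDirectory(at: directory,
                                                withIntermediateDirectories: true)
    }
}
```

There is no schema and no migration: if the cached type changes, old files fail to decode and are regenerated on the next visit.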

Hash the full prompt as the cache key - My current approach uses session IDs and data hashes, which are proxy signals for “did the prompt change?” A more universal approach: hash the actual prompt string you’re sending to the AI. If the prompt is identical, a fresh generation would see exactly the same input, so the cached response is as good as a new one. This also makes the cache provider-agnostic.
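A sketch of that idea, assuming the key should also include a model identifier so that switching models invalidates old entries. To stay dependency-free it uses 64-bit FNV-1a, a fast non-cryptographic hash that, unlike `hashValue`, is stable across launches; on Apple platforms CryptoKit's `SHA256` would be the more collision-resistant choice. `promptCacheKey(prompt:model:)` is a hypothetical name.

```swift
/// 64-bit FNV-1a over the UTF-8 bytes: deterministic across launches and platforms.
func fnv1a64(_ text: String) -> UInt64 {
    var hash: UInt64 = 0xcbf29ce484222325  // FNV offset basis
    for byte in text.utf8 {
        hash ^= UInt64(byte)
        hash = hash &* 0x100000001b3       // FNV prime, wrapping multiply
    }
    return hash
}

/// Hypothetical cache key: hash of the model identifier plus the exact prompt text.
func promptCacheKey(prompt: String, model: String) -> String {
    // A unit-separator character (unlikely in a model name) keeps
    // "ab" + "c" distinct from "a" + "bc".
    let digest = fnv1a64(model + "\u{1F}" + prompt)
    return String(digest, radix: 16)
}
```

Any change the model would see, whether an edited error count or a different model, produces a different key; identical inputs always map to the same one.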

Consider cache warming - Right now, caches are only populated when the user navigates to a screen. A background task that pre-generates caches after a session is saved would make every first visit instant, not just repeat visits.
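A sketch of warming, assuming generation can be kicked off right after the save that invalidated the cache. `warmCache` and its parameters are hypothetical; in the app the generator would be an async AI call run in a background task, but it is synchronous here to keep the example self-contained.

```swift
/// Hypothetical warming step: after a save has invalidated the cache, pre-generate
/// every missing entry so the first visit to a screen is already a cache hit.
/// Returns the keys that were generated.
@discardableResult
func warmCache<Key: Hashable, Value>(
    keys: [Key],
    cache: inout [Key: Value],
    generate: (Key) -> Value
) -> [Key] {
    var generated: [Key] = []
    for key in keys where cache[key] == nil {
        cache[key] = generate(key)  // stand-in for an async AI call in the real app
        generated.append(key)
    }
    return generated
}
```

Entries that are still cached are skipped, so warming after an unrelated save costs nothing.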

The Three Principles

If you take anything from this post, it’s these three ideas:

Data-change invalidation beats time-based TTL - Your AI cache should live as long as its input data is unchanged. A 3-day-old cache with matching data is more valuable than a 5-minute-old cache from stale data.

Separate cache storage from user data - AI caches are disposable. User sessions are not. Don’t let them share migration complexity. Use a separate store, a separate file, or at minimum a separate table.

Cache first, generate second, save third - The pattern is always the same: check cache → return if valid → generate if not → save for next time. Whether it’s OpenAI, Anthropic, Apple FoundationModels, or a local LLM — the flow doesn’t change.
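The whole flow fits in one generic helper. A minimal, provider-agnostic sketch: `CacheFirst` is a hypothetical type, the in-memory dictionary stands in for whatever store you use, and `isValid` is where a session-ID or data-hash check would plug in. Generation is synchronous here to keep it self-contained; a real LLM call would be `async`.

```swift
/// Cache first, generate second, save third.
final class CacheFirst<Key: Hashable, Value> {
    private var storage: [Key: Value] = [:]
    private let isValid: (Key, Value) -> Bool

    init(isValid: @escaping (Key, Value) -> Bool = { _, _ in true }) {
        self.isValid = isValid
    }

    func value(for key: Key, generate: (Key) throws -> Value) rethrows -> Value {
        // 1. Check the cache and return if the entry is still valid.
        if let cached = storage[key], isValid(key, cached) {
            return cached
        }
        // 2. Generate on a miss or an invalid entry.
        let fresh = try generate(key)
        // 3. Save for next time.
        storage[key] = fresh
        return fresh
    }
}
```

Swapping the dictionary for SwiftData, JSON files, or anything else leaves the three steps unchanged.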

If you play pickleball and want AI-powered coaching insights from your sessions, check out PickleRite on the App Store. It tracks your mistakes on Apple Watch, syncs to iPhone, and uses on-device AI to tell you exactly what to work on next.